Healing Crystals - Building a protein crystal image recognition algorithm to fight COVID-19

- Grant Wiersum

- Jun 8, 2022

Updated: Jun 22, 2022

Introduction: Why Crystals?

No - not the ones that keep Zordon alive...

Protein crystals are much smaller and far too delicate to lie on. There are several ways to determine the structure of a protein, but by far the most heavily relied on is X-ray crystallography (XRC). Under this scheme:

1. The protein's gene must be isolated from the genetic sequence,

2. that sequence must be cloned into a vector,

3. that vector must be transfected into an expression cell line,

4. the cells have to express the protein,

5. the expressed protein must be purified to a very high degree,

6. the pure protein must be crystallized,

7. those crystals must diffract X-rays with enough resolution, and

8. a useful structure must be determined from that diffraction pattern.

Learning From Failure

Every step in this process has a failure rate, but none so high as growing the crystals themselves. There are countless rules, heuristics and dark rituals for divining the right conditions that will yield a protein crystal, but the best method by far is to try everything. A typical protein screen consists of about 384 conditions (four 96-well plates). A "high-throughput" screen can be on the order of 3,000+ conditions.

Each condition needs to be monitored for the presence of crystals. This process is entirely manual: a scientist flips through photographs of crystal drops by eye, one by one. Some tools have arisen to tag images and help scientists prioritize but, at the end of the day, every image must be viewed. At 3 seconds each, a single protein can take 20 minutes to 2.5 hours to evaluate. Given that a lab can have hundreds of proteins, each needing weekly screening, the situation becomes untenable. This was the situation that led the State University of New York at Buffalo to search for a data-driven solution.
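
The time estimate above is simple arithmetic, and a short sketch makes it easy to plug in other screen sizes. The function name and constants here are illustrative, and the only inputs are the 3 seconds per image and the screen sizes already quoted.

```python
SECONDS_PER_IMAGE = 3  # viewing time per image, as quoted in the text


def review_minutes(n_conditions: int, seconds_per_image: float = SECONDS_PER_IMAGE) -> float:
    """Minutes needed to look at one image per crystallization condition."""
    return n_conditions * seconds_per_image / 60


typical = review_minutes(4 * 96)        # four 96-well plates = 384 conditions
high_throughput = review_minutes(3000)  # a "high-throughput" screen

print(f"typical screen: {typical:.1f} min")
print(f"high-throughput screen: {high_throughput / 60:.1f} h")
```

That is roughly 19 minutes for a typical screen and 2.5 hours for a high-throughput one, per protein, per inspection.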

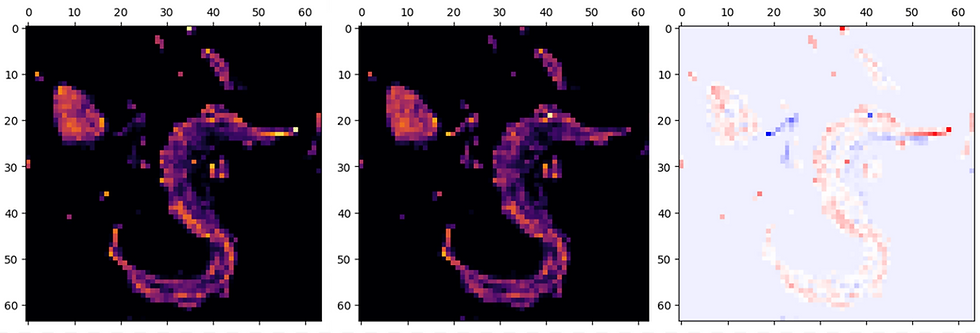

MAchine Recognition of Crystallization Outcomes (MARCO) was a significant attempt at building a dataset that could be used to train an AI to classify crystals. This dataset consists of 415,775 images: 53,044 of crystals, 139,865 of "clear" wells with no material in them, 199,567 of precipitated material and 23,299 labeled "other", usually spots of mold or phase separation. From the MARCO set, Bruno et al. (2018) published "Classification of crystallization outcomes using deep convolutional neural networks" in PLOS ONE. Using an InceptionV3-based model, this Buffalo-based team was able to achieve 94% accuracy and 84% recall. These results far surpass the previous DeepCrystal model at 74%, approaching, if not surpassing, human parity given its speed.
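
To get a feel for how lopsided the classes are, here is a minimal sketch that turns the label counts quoted above into shares of the dataset. The dictionary keys are shorthand labels chosen for this example, not official MARCO field names.

```python
# Label counts for the MARCO dataset, as quoted in the text.
marco_counts = {
    "crystals": 53044,
    "clear": 139865,
    "precipitate": 199567,
    "other": 23299,
}

total = sum(marco_counts.values())
shares = {label: count / total for label, count in marco_counts.items()}

for label, share in shares.items():
    print(f"{label:12s} {share:6.1%}")
```

Crystals make up only about 13% of the images, so a model that never predicted "crystal" would still be right about 87% of the time on the crystal/no-crystal question. That is why recall on the crystal class matters more than the headline accuracy.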

So here's our benchmark: 91% recall, 9% false negatives, 5.8% false positives.
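
For reference, here is a small sketch of how figures like these fall out of a confusion matrix for the "crystal" class. The counts are made up for illustration and are not taken from the paper. Note that a "false positive" percentage can mean either the false positive rate or the false discovery rate, so both are computed.

```python
def crystal_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Binary metrics for the 'crystal' class from confusion-matrix counts."""
    return {
        "recall": tp / (tp + fn),                 # crystals that were found
        "false_negative_rate": fn / (tp + fn),    # crystals that were missed
        "false_positive_rate": fp / (fp + tn),    # non-crystals flagged as crystals
        "false_discovery_rate": fp / (tp + fp),   # flagged images that were wrong
    }


# Hypothetical counts, chosen only to illustrate the definitions.
m = crystal_metrics(tp=910, fn=90, fp=56, tn=6944)

for name, value in m.items():
    print(f"{name:22s} {value:.3f}")
```

Recall and the false negative rate always sum to 1, which is why 91% recall and 9% false negatives are the same statement.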

This model was operationalized by Merck, and made available for download on GitHub with the POLO frontend which works beautifully: https://github.com/Merck/polo

The model itself can be downloaded, thanks to Vincent Vanhoucke, at:

https://github.com/vincentvanhoucke/models/tree/master/research/marco

Here's the training curve:

From Bruno et al.:

The model is implemented in TensorFlow, and trained using an asynchronous distributed training setup across 50 NVidia K80 GPUs. The optimizer is RmsProp, with a batch size of 64, a learning rate of 0.045, a momentum of 0.9, a decay of 0.9 and an epsilon of 0.1. The learning rate is decayed every two epochs by a factor of 0.94. Training completed after 1.7M steps, in approximately 19 hours, having processed 100M images, which is the equivalent of 260 epochs. The model thus sees every training sample 260 times on average, with a different crop and set of distortions applied each time.
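
The learning-rate schedule and the image count in that passage can be sanity-checked with a few lines. This is a sketch under two assumptions not stated in the quote: that the decay is applied as a staircase (one multiplication per two completed epochs, rather than continuously), and that the batch size of 64 applies to every one of the 1.7M steps.

```python
BASE_LR = 0.045          # initial learning rate from the quoted setup
DECAY_FACTOR = 0.94      # multiplicative decay
EPOCHS_PER_DECAY = 2     # "decayed every two epochs"
BATCH_SIZE = 64
TOTAL_STEPS = 1_700_000


def learning_rate(epoch: float) -> float:
    """Staircase decay (assumed): multiply by 0.94 once per two completed epochs."""
    return BASE_LR * DECAY_FACTOR ** (int(epoch) // EPOCHS_PER_DECAY)


images_seen = TOTAL_STEPS * BATCH_SIZE  # about 109M, in line with "100M images"

for epoch in (0, 2, 10, 100, 260):
    print(f"epoch {epoch:3d}: lr = {learning_rate(epoch):.2e}")
print(f"images processed: {images_seen:,}")
```

Under these assumptions the learning rate has shrunk by several orders of magnitude by the end of the 260 epochs, so the late epochs amount to fine polishing.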

Data augmentation scheme used:

random selection of 599x599 region of image, randomized brightness (± 32 out of 255), randomized saturation (from 50% to 150%), randomized hue (± 0.2 out of 0.5), randomized contrast (from 50% to 150%). Random horizontal and/or vertical flip. Evaluation images were center-cropped at 599x599.
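
A rough NumPy sketch of part of that augmentation scheme is below. It is not the authors' TensorFlow pipeline: the function names are invented for this example, saturation and hue jitter are left out because they need a color-space conversion, and contrast is scaled around the global image mean as a simplification.

```python
import numpy as np

CROP = 599  # crop size quoted in the text


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random crop, brightness, contrast and flips on an HxWx3 uint8 image."""
    h, w, _ = image.shape
    top = rng.integers(0, h - CROP + 1)    # image must be at least 599x599
    left = rng.integers(0, w - CROP + 1)
    out = image[top:top + CROP, left:left + CROP].astype(np.float32)

    out += rng.uniform(-32, 32)                        # brightness: +/- 32 out of 255
    mean = out.mean()
    out = (out - mean) * rng.uniform(0.5, 1.5) + mean  # contrast: 50% to 150%

    if rng.random() < 0.5:
        out = out[:, ::-1]                             # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                             # vertical flip
    return np.clip(out, 0, 255).astype(np.uint8)


def center_crop(image: np.ndarray) -> np.ndarray:
    """Deterministic 599x599 center crop, as used for evaluation images."""
    h, w, _ = image.shape
    top, left = (h - CROP) // 2, (w - CROP) // 2
    return image[top:top + CROP, left:left + CROP]
```

Training images would go through `augment` with a fresh random draw each epoch, while evaluation images would only be passed through `center_crop`.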

Protein Science's Next Top Model

In building a better model, a few points from this study are noteworthy. Primarily, the Inception V3 model no longer represents the state of the art. Models that leverage transformers, coupled with convolutional neural networks, such as CoCa (published by Jiahui Yu and colleagues in 2022), represent 12 of the top 15 performers among ImageNet's top-1 accuracy rankings.

These architectures train on image "patches", cutting the image into pieces. This way a model can learn and tune what specifically within an image to focus its attention on. Context, in classifying crystallization drops, is particularly important, since only a very small portion of the image may contain relevant information, i.e., the crystallization drop itself. Further, other non-crystal outcomes have strong contextual cues that an AI can now be trained on.

TensorFlow now (according to example code from 2021/01/18) contains an 'extract_patches' function, tf.image.extract_patches, which can cut images into these subdomains for attention-based learning, as described in the paper "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale" by A. Dosovitskiy et al. (2020).
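
To show what cutting an image into patches means in practice, here is a minimal NumPy sketch. It is a stand-in for illustration, not TensorFlow's implementation: it only handles non-overlapping square patches on a single image, and the 16-pixel patch size is borrowed from the paper's title.

```python
import numpy as np


def extract_patches(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an HxWxC image into non-overlapping patch x patch tiles, each flattened."""
    h, w, c = image.shape
    rows, cols = h // patch, w // patch
    image = image[:rows * patch, :cols * patch]    # drop ragged edges
    tiles = image.reshape(rows, patch, cols, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)         # (rows, cols, patch, patch, c)
    return tiles.reshape(rows * cols, patch * patch * c)


image = np.arange(32 * 32 * 3).reshape(32, 32, 3)  # toy 32x32 RGB image
patches = extract_patches(image, patch=16)
print(patches.shape)  # (4, 768)
```

Each row of the result is one flattened patch, in left-to-right, top-to-bottom order. A vision transformer would project each row to an embedding and let attention decide which patches (ideally the ones containing the drop) deserve the most weight.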

The last, and possibly most important, difference that can be made is predicting not just "crystal" vs. "no crystal" but going on to predict diffraction.

Specific crystal forms may diffract better or worse than others. Experienced crystallographers, like expert jewelers, know to pick up on features like size, symmetry, shape, color, clarity. Given an image, it may be possible that an AI could be trained to better classify crystallization outcomes by refocusing its effort on the task's ultimate goal - structural biology. With that goal in mind, future datasets should focus on quality, rather than the "crystal/no crystal" binary. While it's certainly useful to classify into discrete categories, it may undermine the true potential of AI to ask it to be only as good as a human. After all, isn't the point of AI to surpass our limits?
